"Last week, I jokingly asked a health club acquaintance whether he would change his mind about his choice for president if presented with sufficient facts that contradicted his present beliefs. He responded with utter confidence. "Absolutely not," he said. "No new facts will change my mind because I know that these facts are correct."

I was floored. In his brief rebuttal, he blindly demonstrated overconfidence in his own ideas and the inability to consider how new facts might alter a presently cherished opinion. Worse, he seemed unaware of how irrational his response might appear to others. It's clear, I thought, that carefully constructed arguments and presentation of irrefutable evidence will not change this man's mind.

In the current presidential election, a major percentage of voters are already committed to "their candidate"; new arguments and evidence fall on deaf ears. And yet, if we, as a country, truly want change, we must be open-minded, flexible and willing to revise our opinions when new evidence warrants it. Most important, we must be able to recognize and acknowledge when we are wrong. Unfortunately, cognitive science offers some fairly sobering observations about our ability to judge ourselves and others.

Perhaps the single academic study most germane to the present election is the 1999 psychology paper by David Dunning and Justin Kruger, "Unskilled and Unaware of It: How Difficulties in Recognizing One's Own Incompetence Lead to Inflated Self-Assessments." The two Cornell psychologists began with the following assumptions.

- Incompetent individuals tend to overestimate their own level of skill.

- Incompetent individuals fail to recognize genuine skill in others.

- Incompetent individuals fail to recognize the extremity of their inadequacy.

On average, participants placed themselves in the 66th percentile, revealing that most of us tend to overestimate our skills somewhat. But those in the bottom 25 percent consistently overestimated their ability to the greatest extent. For example, in the logical reasoning section, individuals who scored in the 12th percentile believed that their general reasoning abilities fell at the 68th percentile, and that their overall scores would be in the 62nd percentile. The authors point out that the problem was not primarily underestimating how others had done; those in the bottom quartile overestimated the number of their correct answers by nearly 50 percent. Similarly, after seeing the answers of the best performers -- those in the top quartile -- those in the bottom quartile continued to believe that they had performed well.

The article's conclusion should be posted as a caveat under every political speech of those seeking office. And it should serve as the epitaph for the Bush administration: "People who lack the knowledge or wisdom to perform well are often unaware of this fact. That is, the same incompetence that leads them to make wrong choices also deprives them of the savvy necessary to recognize competence, be it their own or anyone else's."

The converse also bears repeating. Despite the fact that students in the top quartile fairly accurately estimated how well they did, they also tended to overestimate the performance of others. In short, smart people tend to believe that everyone else "gets it." Incompetent people display both an increasing tendency to overestimate their cognitive abilities and a belief that they are smarter than the majority of those demonstrably sharper.

Closely allied with this unshakable self-confidence in one's decisions is a second separate aspect of meta-cognition, the feeling of being right. As I have pointed out in my recent book, "On Being Certain," feelings of conviction, certainty and other similar states of "knowing what we know" may feel like logical conclusions, but are in fact involuntary mental sensations that function independently of reason. At their most extreme, these are the spontaneous "aha" or "Eureka" sensations that tell you that you have made a major discovery. Lesser forms include gut feelings, hunches and vague intuitions of knowing something, as well as the standard "yes, that's right" feeling that you get when you solve a problem.

The evidence is substantial that these feelings do not correlate with the accuracy or quality of the thought. Indeed, these feelings can occur in the absence of any specific thought, such as with electrical and chemical brain stimulation. They can also occur spontaneously during so-called mystical or spiritual epiphanies in which the affected person senses an immediate [...]. William James described this phenomenon as "felt knowledge."

Feelings of absolute certainty and utter conviction are not rational deliberate conclusions; they are involuntary mental sensations generated by the brain. Like other powerful mental states such as love, anger and fear, they are extraordinarily difficult to dislodge through rational arguments. Just as it's nearly impossible to reason with someone who's enraged and combative, refuting or diminishing one's sense of certainty is extraordinarily difficult. Certainty is neither created by nor dispelled by reason.

Similarly, without access to objective evidence, we are terrible at determining whether a candidate is telling us the truth. Most large-scale psychological studies suggest that the average person is incapable of accurately predicting whether someone is lying. In most studies, our abilities to make such predictions, based on facial expressions and body language, are no greater than by chance alone -- hardly a recommendation for choosing a presidential candidate based upon a gut feeling that he or she is honest.

Worse, our ability to assess political candidates is particularly questionable when we have any strong feeling about them. An oft-quoted fMRI study by Emory psychologist Drew Westen illustrates how little conscious reason is involved in political decision-making.

Westen asked staunch party members from both sides to evaluate negative (defamatory) information about their 2004 presidential choice. Areas of the brain (prefrontal cortex) normally engaged during reasoning failed to show increased activation. Instead, the limbic system -- the center for emotional processing -- lit up dramatically. According to Westen, both Republicans and Democrats "reached totally biased conclusions by ignoring information that could not rationally be discounted" (cognitive dissonance).

In other words, we are as bad at judging ourselves as we are at judging others. Most cognitive scientists now believe that the majority of our thoughts originate in the areas of the brain inaccessible to conscious introspection. These beginnings of thoughts arrive in consciousness already colored with inherent bias. No two people see the world alike. Each of our perceptions is filtered through our genetic predispositions, inherent biologic differences and idiosyncratic life experiences. Your red is not my red. These differences extend to the very building blocks of thoughts; each of us will look at any given question from his own predispositions. Thinking may be as idiosyncratic as fingerprints."

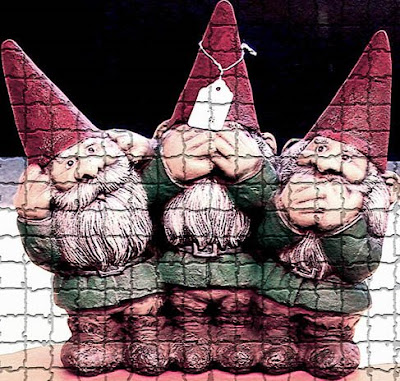

-Robert Burton (Excerpt: "My Candidate, Myself," Salon.com, 9.22.08. Image: See No Evil, Hear No Evil, Speak No Evil: Gnomes, SidelineCreations.com, 2008).

2 comments:

a perfect example of "closed mindedness". which is why we are in the position we're in. nobody wants to listen, because they know it all already.
